As part of the Quantum Chaos course (offered by Prof Pragya Shukla) during my MSc in Physics at IIT Kanpur, we were required to prepare a report and a presentation on a relevant paper of our choice. I chose the review paper “A Mini Introduction to Information Theory” by Prof Edward Witten of the Institute for Advanced Study, Princeton. Below is the report:

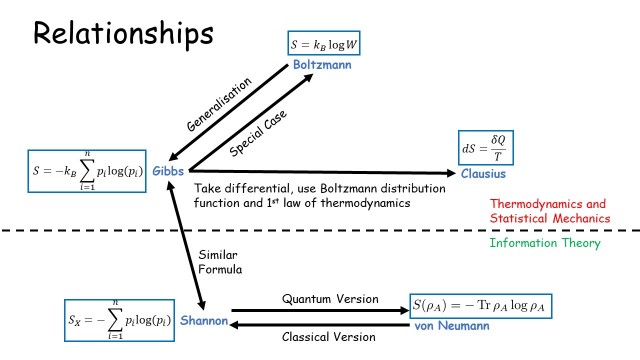

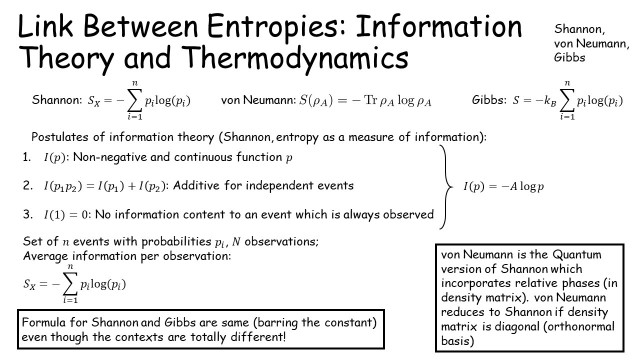

I also gave a presentation on this topic. In the presentation, after introducing the basic definitions from the paper, I tried to establish the connections between the various entropy quantities defined there. The word “entropy” appears in many different contexts in thermodynamics and information theory, so I put these seemingly different definitions of entropy together into a chart describing how they are related.

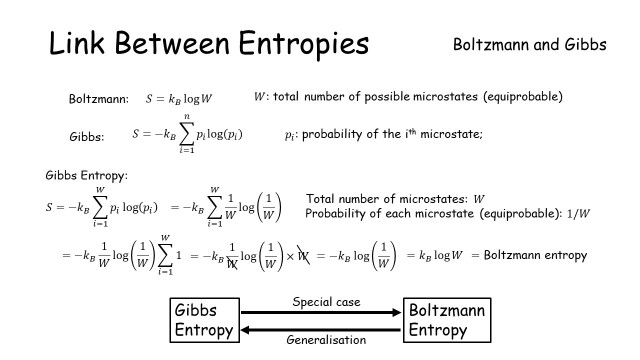

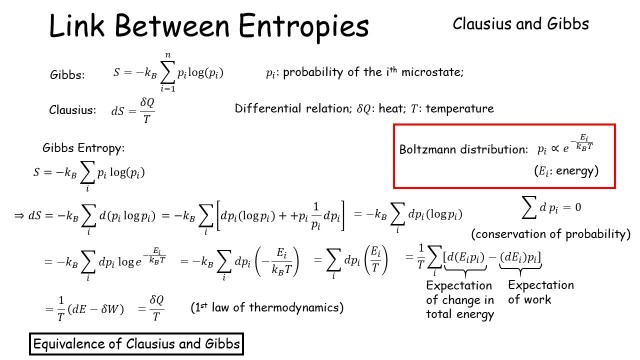
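The chart and the proofs themselves are images and are not reproduced in text here. As a rough numerical companion, the sketch below checks a few standard relations among the classical quantities that Witten's paper defines (Shannon entropy, conditional entropy, relative entropy and mutual information) on a small joint distribution. It assumes these classical quantities are among those in the chart; quantum quantities such as von Neumann entropy are not covered. All function names are hypothetical, entropies are measured in bits (log base 2), and this is only an illustration, not the method used in the presentation.

```python
import math

def shannon_entropy(p):
    """Shannon entropy H(p) = -sum_i p_i log2 p_i in bits, using 0 log 0 = 0."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def relative_entropy(p, q):
    """Relative entropy S(p||q) = sum_i p_i log2(p_i / q_i) in bits.
    Assumes q_i > 0 wherever p_i > 0 (otherwise S(p||q) is infinite)."""
    return sum(x * math.log2(x / y) for x, y in zip(p, q) if x > 0)

def marginals(joint):
    """Marginals p_X(x) = sum_y p(x, y) and p_Y(y) = sum_x p(x, y)."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    return p_x, p_y

def entropy_relations(joint):
    """Compute several entropy quantities for a joint table joint[x][y]."""
    p_x, p_y = marginals(joint)
    flat = [v for row in joint for v in row]  # row-major order: x outer, y inner
    h_x, h_y, h_xy = shannon_entropy(p_x), shannon_entropy(p_y), shannon_entropy(flat)

    # Conditional entropy from its definition: H(X|Y) = sum_y p(y) H(X | Y = y)
    h_x_given_y = sum(
        py * shannon_entropy([joint[i][j] / py for i in range(len(joint))])
        for j, py in enumerate(p_y)
        if py > 0
    )

    # Product of marginals p_X(x) p_Y(y), in the same row-major order as `flat`
    product = [px * py for px in p_x for py in p_y]

    return {
        "H(X)": h_x,
        "H(Y)": h_y,
        "H(X,Y)": h_xy,
        "H(X|Y)": h_x_given_y,
        # I(X;Y) = H(X) + H(Y) - H(X,Y)
        "I(X;Y) via entropies": h_x + h_y - h_xy,
        # I(X;Y) = S(p_XY || p_X p_Y), which is why I(X;Y) >= 0
        "I(X;Y) via relative entropy": relative_entropy(flat, product),
    }

if __name__ == "__main__":
    # A correlated pair of bits: X and Y agree with probability 0.8
    joint = [[0.4, 0.1], [0.1, 0.4]]
    for name, value in entropy_relations(joint).items():
        print(f"{name}: {value:.4f}")
```

Running it should show that H(X|Y) equals H(X,Y) - H(Y) (the chain rule) and that the two expressions for the mutual information agree. For an independent joint distribution such as `[[0.25, 0.25], [0.25, 0.25]]` both mutual-information values drop to zero.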

Here are the proofs of the connections between the various entropy quantities shown in the chart above:

And finally, here is the presentation, uploaded to my YouTube channel:
